Which ZK proving languages are the most future proof?

Credits to 1k(x) for the header image.

A big thank you to Alessandro Coglio, Eric McCarthy & Evan Schott for their comments and feedback.

Over the last years, theory and tooling to generate zero-knowledge proofs have advanced massively. It is now possible to prove statements which are bigger and more complex, and we can do so faster than ever before.

Incredibly, it's now possible for developers to prove statements written in a high-level programming language such as C or Rust. Starting in 2013 with TinyRam, there are now several systems which are able to prove subsets of LLVM-compiled code out of the box, albeit with varying levels of production-readiness. Most of these systems target pre-existing Instruction Set Architectures (ISAs) such as RISC-V. Is this really the holy grail? And if so, should other more customized zkVMs and zkDSLs consider adjusting?

One of the heroes to help make a lot of progress possible, Justin Thaler, had a spicy answer to this question, which I'll quote in full:

What are the downsides of zkVMs targeting (ostensibly) SNARK-friendly ISAs rather than pre-existing ISAs like RISC-V?

In summary, zkVM projects insisting on the use of a custom DSL for efficient compilation to an ostensibly “SNARK-friendly” VM have chosen a path riddled with drawbacks. Each design decision is a mistake–a bad VM, an inefficient SNARK used to prove that VM, a new high-level language to ease compilation to the VM, and a new compiler to perform the compilation. Each mistake adds significant inefficiency and vulnerability to errors and bugs.

Let's clarify the claim on whether we should all be moving towards zkVMs with pre-existing ISAs.

Types of ZK languages

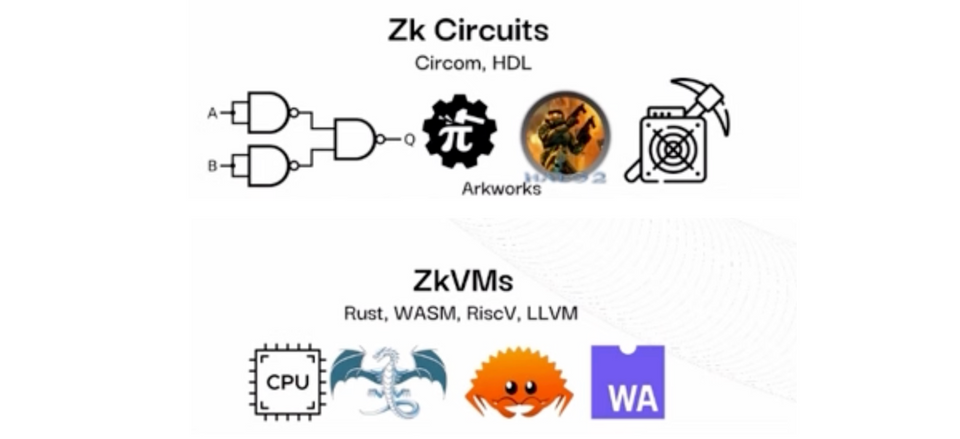

Let's start our investigation by distinguishing different approaches to zero-knowledge proof programming languages:

- Languages which compile each statement to a custom circuit. Examples are Circom, Leo, Noir and halo2.

- Languages which repeatedly invoke a circuit modelling a VM with a custom snark-friendly ISA. Examples are Lurk and Polygon Miden. The Powdr compiler stack also fits into this paradigm.

- Languages which repeatedly invoke a circuit modelling a VM with a pre-existing ISA. Examples are RISC-Zero, Valida, SP-1, Jolt, and zk-LLVM.

To get some clarity about when each approach makes more sense, let's discuss various common criteria, in order of how easy and objectively they are to answer:

Criterion 1: Proving overhead

For most proof systems, the prover is the bottleneck to the size and types of statements which can be proven. So, which language category offers the fastest proving time? I think it's useful to remember category 1 wins, but it also depends on what you want to prove.

Representing simple straight-line programs as a set of VM instructions adds significant proving overhead. Current state-of the art RISC-V zk-VMs are about 500,000 times slower than native execution. In contrast, when making proofs about highly uniform statements, it's possible to achieve proving overheads of only 180 times slower. Actually, scratch that, it's even possible to prove certain statements faster than it takes to compute them.

Most category 2 and 3 languages rely on various optimised sub-circuits, also frequently called "built-ins", which bring them back partially into category 1 land. And if most of what you're trying to prove consists of those built-ins, you might as well use a category 2 or 3 language. Moreover, if the program you're trying to prove contains control flow, category 2 and 3 languages can also be faster, because they natively allow for only proving those components which are executed.

For large general purpose computation, it is plausible that category 3 languages can be faster than category 2 languages. However, category 2 languages can still shine in the realm of proving cryptographic operations.

When combined with accumulation (e.g. Nova or Protostar), VM languages can certainly be "fast enough" for certain applications. As an additional benefit, by chopping up the computation into small pieces, prover space (memory usage) is extremely small.

Finally, ZK accelerating hardware is most likely to target VMs and standardized instruction sets, which will offer a 100x proving speedup. However, it will take many years before such hardware finds its way to consumer laptops and mobile phones.

Criterion 2: Developer tooling (for users of the ZK language)

For many use cases, category 3 wins. If one can use a language like Rust out of the box, with all the inherent tooling which exists for it, that is a big win. Moreover, we can make proofs about large amounts of already existing software programs, such as Rust EVM and Rust Tendermint packages. And as a corollary, any additional tooling which is built can be used by a large community.

It is important to note that the extensibility is also at odds with improved security. An important aspect of tooling is also to prevent developers from 'shooting themselves in the foot' and introducing security issues. And if security is your primary concern, you want control over the programming environment. Category 3 languages allow for importing arbitrary code and languages, and if developers are not careful, subtle bugs in those libraries could introduce vulnerabilities in your proof. This might be an acceptable risk in a low-stakes environment, but if we want proofs to protect and enforce the transmission of very large amounts of value, you'll always want to recommend and enforce the use of safe and potentially formally verified libraries, and mark the rest using some keyword such as unsafe.

Criterion 3: Developer tooling (for developers of the ZK language)

Independently from the other criteria, it is not clear to me which language category is easier to build.

Thaler mentions that a big advantage of category 3 languages is that its creators don't have to build their own compiler. However, this seems a bit misguided, because the majority of compiler logic of pre-existing languages is not relevant in the context of generating proofs.

Rust's most complex and useful feature - memory safety - is not by itself meaningful in the context of generating a proof. Neither is language syntax related to concurrency. Going one level lower, a significant portion of compiler middleware is dedicated to hardware-independent and hardware-dependent optimizations. While these optimizations are essential if you want to prove statements about Rust (see criterion 2), it would be silly for category 1 and category 2 languages to adopt these. Instead, they can implement more direct optimizations which lead to smaller circuits (see criterion 1).

Moreover, depending on your application, you might want to introduce syntax which existing languages don't support. Leo's main purpose is to generate proofs on the Aleo cryptocurrency network, and its async await syntax explicitly models moving data between an on-chain and off-chain environment. Another great example of a language with useful novel syntax is Lurk, which has first-class support for functional commitments. You should really check out their FAQ.

Finally, we should evaluate how easy it is to ensure that the language is secure. Thaler mentions the following:

All programs used to secure anything of value should ultimately be formally verified for correctness, and just as custom ISAs require new compilers, custom DSLs will require new verification tooling.

Indeed, formally verifying R1CS translation of opcodes in the category 1 Leo compiler in ACL2 was a significant undertaking of a few person-months. So this particular aspect might seem easier for category 2 and 3 languages, because at first sight there is only one circuit.

However, building a formally verifying compiler of more or less any kind seems comparable in effort. Complexity Has to Live Somewhere, and category 2 and 3 languages "push" the complexity upwards. Auditing category 2 and 3 languages requires knowledge of possibly complex VM architecture. Moreover, as mentioned above, they rely on various optimized sub-circuits or built-ins, which individually bring us back into category 1 land. To make matters more complex, these sub-circuits might require explicit analysis of compiler and cryptography logic (e.g. handling of shared memory between the VM and sub-circuits). Any formal verification is therefore likely to take a compositional approach.

And whereas a category 2 language might have a simpler VM abstraction, a category 3 language might benefit from pre-existing tooling contributing to the formal verification.

Conclusion

There are different approaches and many trade-offs when it comes to language design. In the context of ZK languages, I believe clear differentiators are their proving speed, and whether or not they allow you to prove statements for the application which you care about.

It might seem tempting to think it is exceedingly useful to be able to prove statements about existing software with only minor modifications. And indeed, it is awesome. However, this by itself is not a reason for all projects to migrate to proving RISC-V cycles, similar to how the world has not settled on a single programming language. Time will tell which approaches gain the most adoption. Verifiable software is here to stay, and I can't wait to see them deployed on a large scale.